KEYNOTE SPEAKERS:

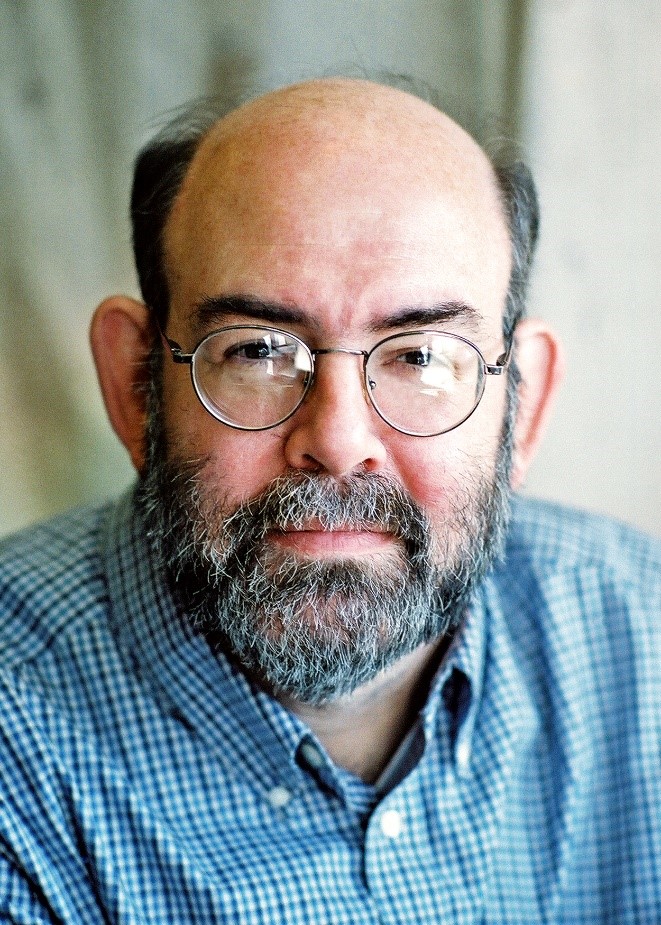

Mitch Marcus is the RCA Professor of Artificial Intelligence Emeritus in the Department of Computer and Information Science at the University of Pennsylvania. He was a Member of Technical Staff at AT&T Bell Laboratories before coming to Penn in 1987. He has served as chair of Penn's Computer and Information Science Department, as chair of the Penn Faculty Senate, and as president of the Association for Computational Linguistics. He currently serves as chair of the Advisory Committee of the Center of Excellence in Human Language Technology at Johns Hopkins University and as Technical Advisor to that Center. He was named a Fellow of the American Association for Artificial Intelligence in 1992 and a Founding Fellow of the Association for Computational Linguistics in 2011.

He created and ran the Penn Treebank Project through the mid-1990s, which developed the primary training corpus that led to a breakthrough in the accuracy of natural language parsers. He was a co-PI on the OntoNotes Project and PI for an ARO-funded MURI project to investigate natural language understanding for human-robot interaction with co-PIs at Stanford, Cornell, UMass Amherst, UMass Lowell and George Mason. Until recently he was PI of a project on completely unsupervised morphology acquisition under DARPA LORELEI, working with Prof. Charles Yang in Linguistics, and he is currently working with a team at ISI Boston on simulations of child language acquisition funded by the DARPA GAILA AIX Program. His current research interests include statistical natural language processing and cognitively plausible models for automatic acquisition of linguistic structure.

https://www.cis.upenn.edu/~mitch/

Yue Zhang is currently an associate professor at Westlake University. Before joining Westlake in 2018, he worked as an assistant professor at Singapore University of Technology and Design and as a research associate at the University of Cambridge. He received his PhD degree from the University of Oxford in 2009 and his BEng degree from Tsinghua University, China in 2003. His research interests lie in fundamental algorithms for NLP, syntax, semantics, information extraction, sentiment, text generation, machine translation and dialogue systems. He serves as an action editor for Transactions of the Association for Computational Linguistics (TACL), and has served as an area chair of ACL (2021, 20, 19, 18, 17), EMNLP (2021, 20, 19, 17, 15), COLING (2018, 14) and NAACL (2021, 2019, 15). He has given several tutorials at ACL, EMNLP and NAACL, and won awards at SemEval 2020 (best paper honorable mention), COLING 2018 (best paper) and IALP 2017 (best paper).

Chungmin Lee attended the College of Liberal Arts and Sciences, Seoul National University and its Graduate School, completing a BA and MA in English (Linguistics), and then majored in linguistics at the Graduate School of Indiana University Bloomington as a Fulbright fellow, receiving his Ph.D. in 1973. He taught at Seoul National University on his return home and became assistant professor in the Department of Linguistics in 1974, advancing to associate professor and then professor, and becoming professor emeritus in 2005. He taught semantics, syntax, linguistic theory, language and humans, and mathematical linguistics in the Department of Linguistics and ‘syntactic-semantic structures and cognition’ in the Cognitive Science Program he established at Seoul National University. He served as Director of the Institute for Cognitive Science, Seoul National University, from 1999 to 2001 and has served as Honorary President of the International Association for Cognitive Science since 2008. He was a (visiting) professor at UCLA, 1986-1988, and a professor at the Linguistic Society of America Institute, held at UCSC, UCSB, and Michigan State University in the summers of 1991, 2001, and 2003, teaching structure of Korean and semantics. He was a visiting professor at City University of Hong Kong in the winters of 2010 and 2011, giving graduate seminars in semantics. He is the (leading co-)editor of numerous books such as Lee, C., M. Gordon, D. Buring (eds) 2007 Topic and Focus, Springer; Lee, C., F. Kiefer, M. Krifka (eds) 2017 Contrastiveness in Information Structure, Alternatives and Scalar Implicatures, Springer; Larrivee, P. and C. Lee (eds) Negation and Polarity: Experimental Perspectives, LCAM No. 1, Springer; Pustejovsky, J., --- C. Lee (eds) 2013 Advances in Generative Lexicon Theory, Springer; Lee, C., G. Simpson, Y. Kim (eds) 2009; Lee, C., J. Park (eds) 2020 Evidentials and Modals, CRiSPI 39, Brill; Lee, C., Y. Kim, B. Yi (eds) 2021 Numeral Classifiers and Classifier Languages: Chinese, Japanese, and Korean, Routledge. He has also written several books such as 1973 Abstract Syntax and Korean with Reference to English, Thaehaksa, and 2020 Semantic-Syntactic Structures and Cognition, Hankuk, in Korean. He has published in leading international journals such as Papers in Linguistics, Foundations of Language (continued as Linguistics and Philosophy), Linguistic Inquiry, Language, Language Sciences, and Journal of Cognitive Science. His research ranges from meaning and mind in general to cross-linguistic phenomena of speech acts and information structure, negative polarity, tense-aspect-modality-evidentiality and factivity. He has served or serves as editor or editorial board (EB) member of various journals and edited volumes such as Journal of East Asian Linguistics, 1992-2002; Linguistics and Philosophy, EB, 1997-2004; Pragmatics (IPA), editor, 1997-2005; Current Research in the Semantics/Pragmatics Interface (CRiSPI, book series), EB, 1999-present; Journal of Cognitive Science, Editor-in-Chief (as founder), 2000-present; Journal of Pragmatics, Board of Editors, 2005-present; Lingua, EB, 2011-2016, 2019-present; Glossa, EB, 2016-2019; Language, Cognition, and Mind (LCAM, book series), Editor. He has been a Member of the National Academy of Sciences, Republic of Korea, since 2014.

Francesca Strik Lievers is an Assistant Professor in Linguistics at the Department of Modern Languages and Literatures at the University of Genoa, Italy. After receiving her PhD in Linguistics from the University of Pisa, she has worked at various institutions in Italy and at the Hong Kong Polytechnic University, and has been a visiting researcher at the University of Amsterdam, Radboud University of Nijmegen, University of Oxford, and Institut Jean Nicod. Her main research interests are in lexical semantics and figurative language, and she is particularly interested in the linguistic encoding of perceptual experience.

Dr. Lin Jingxia is currently an associate professor at the School of Humanities, Nanyang Technological University Singapore. She received her PhD in Chinese linguistics from Stanford University in 2011. Prior to joining NTU Singapore in 2013, she was a postdoctoral fellow at the Hong Kong Polytechnic University (2011-2012). Her research interests include the syntax-semantics interface, language variation and change, and typology. In addition to Putonghua, she also works on Singapore Mandarin Chinese and the Wenzhou Wu dialect.

KEYNOTE TALKS:

Language learning: Artificial Neural Nets vs. Real Neurons ABSTRACT: Unsupervised learning of natural languages by neural nets requires 10^8 or more words or characters of text. But children learn within a year or two with on the order of 10^3 input tokens. How is that? ... This talk will survey a research program pursued by my research group over many years, investigating what clues children might use to learn language efficiently and how we might exploit these clues to build efficient unsupervised language learners.

Guiding Document Encoding For Sequence-To-Sequence Tasks: A Soft Constraint Method ABSTRACT: Encoding long documents can be challenging in sequence-to-sequence tasks because the input is much longer than in sentence-level tasks. On the one hand, separately encoding each sentence loses useful discourse and coreference information; on the other hand, encoding the document as a single unit can lead to diluted focus in the decoder. This issue has been exemplified in neural machine translation, where it has been shown to be difficult to achieve training convergence using a standard Transformer for document encoding. We address the above issue by using soft constraints to guide the decoder. In particular, a set of group tags, resembling positional embedding vectors, is used to guide attention from the decoder to the encoder. For neural machine translation, we use such soft constraints to guide the decoder towards the current sentence being translated; for abstractive rewriting of extractive text summarization, we use constraints to guide the decoder towards the extracted content being rewritten. Results show that the soft constraints are quite effective, facilitating model convergence and leading to the best results.

Typology Of Factivity Alternation In Different Languages ABSTRACT: This talk explores the typology of factivity alternation in different languages, in pursuit of the ultimate goal of semantic universals of possible factive and non-factive alternants of epistemically-oriented attitude predicates. Korean and other Altaic languages show factivity alternation of the epistemically-oriented cognitive attitude verb ‘know’ and such verbs as ‘remember,’ ‘understand,’ and ‘recognize’ between factive and non-factive readings (Lee 1978, 2019). In contrast, Chinese is a typical non-alternating language; its cognitive epistemic verb zhidao ‘know’ is constantly factive and has no non-factive alternant, along with related attitude verbs such as mingbai/lijie ‘understand,’ yishidao ‘recognize,’ etc. However, the attitude verb jide ‘remember’ alone reveals factivity alternation with factive vs. non-factive alternant readings, with no syntactic differences in its embedded complement clauses. English is close to a non-alternating language; the verb know is a cognitive factive verb, although it shows exceptional non-factive readings in presupposition-cancelling contexts such as some negation, interrogative, or ‘before’ constructions. Altaic gives some clue to alternation with its complementation typing: the thematic argument clausal DP (ACC) is embedded by the factive alternant and the REPORTative C(omp) [with covert SAY] finite mood clause is embedded by the non-factive alternant of epistemically-oriented attitude verbs such as ‘know’ and ‘remember.’ Such principled decomposition and compositionality may lead to semantic universals of possible structural and contextual factive and non-factive alternants of attitude predicates. Factivity is not a myth.

Sensory language and (cross)sensory metaphors ABSTRACT: Language allows us to talk about what we perceive, but sensory words available in the lexicon of individual languages tend to be distributed unevenly across the senses. In English, for instance, there are many words for sounds, but only a few for smells. Interestingly, when we use words from one sense to describe perceptions in another sense, typically through synaesthetic metaphors, we also find asymmetries between the senses. Corpus-based studies show that in many languages it is, for example, common to use a touch adjective to modify a hearing noun, as in “warm voice”, while a hearing adjective modifying a touch noun is less likely. In this talk, I will discuss such asymmetries in the sensory lexicon and in synaesthetic metaphors and examine possible motivations for both.

Scale in Mandarin Chinese ABSTRACT: This talk introduces the notion of scale and shows how it may help to better explain the syntactic and semantic features of verbs, adjectives, and preposition phrases in Modern Mandarin Chinese. The topics to be covered in this talk include the aspectual classification of verbs, the quantitative denotations of simple adjectives, and the word order of spatial preposition phrases.


Office Hours: 9:00-17:00

Office: +86-21-67705180

Email: 2020215@shisu.edu.cn